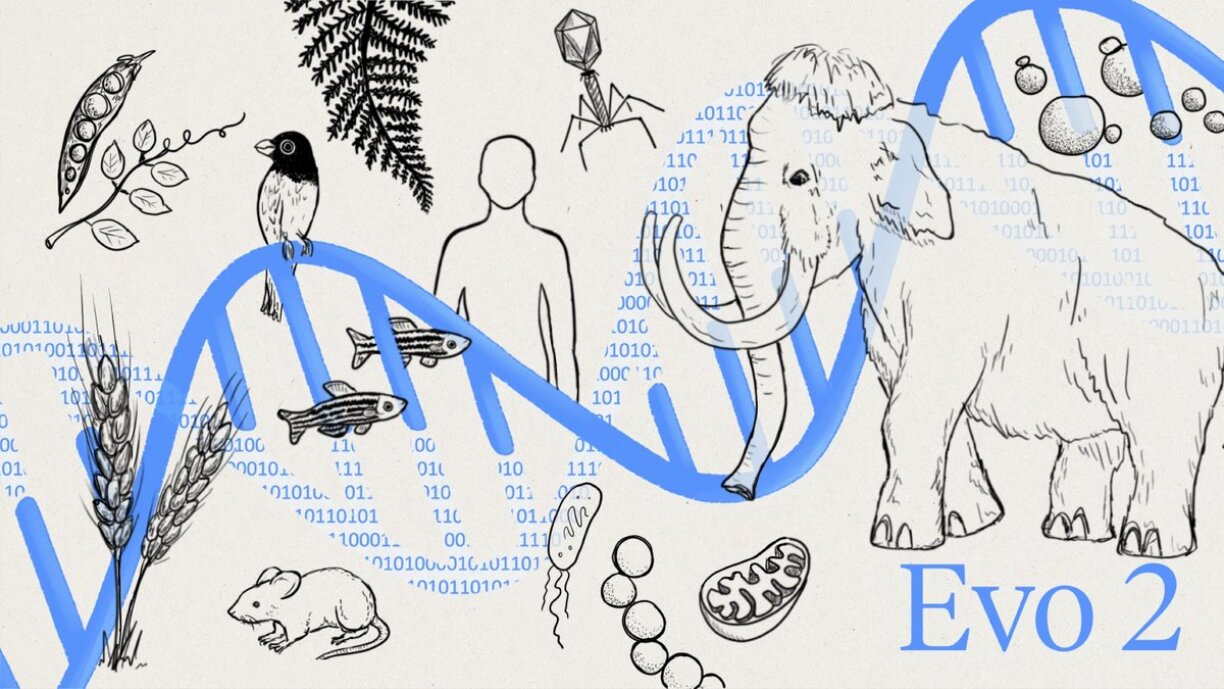

The biomolecular sciences took a huge leap forward as Nvidia, in collaboration with the Arc Institute, unveiled Evo 2, the largest artificial intelligence model in the field. It was trained on 9 trillion nucleotides – the building blocks of DNA and RNA – spanning the three domains of life: Bacteria, Archaea, and Eukarya. Patrick Hsu, co-founder of the Arc Institute and co-senior author of the Evo 2 research, said “Evo 2 has a generalist understanding of the tree of life that’s useful for a multitude of tasks, from predicting disease-causing mutations to designing potential code for artificial life”.

One of the most exciting aspects of the model is its ability to generate entirely new genomes, which raises the question: When will we see the return of mammoths?! Jokes aside (not that much of a joke really), it could help us discover solutions to problems in healthcare and environmental science that we have yet to conceive.

OpenAI has unveiled GPT-4.5, its most advanced and knowledgeable model to date. The new iteration promises more natural interactions and enhanced emotional intelligence. Hallucinations, where the AI generates inaccurate or misleading information, are a major criticism of large language models, and they have reportedly been significantly reduced. According to OpenAI, GPT-4.5 is 10 times more computationally efficient than its predecessor. Unlike OpenAI's o-series models, GPT-4.5 is not a reasoning model, so it does not score as highly on scientific benchmark tests.

However, this model has been given a stronger emotional intelligence component, allowing it to better understand context, tone, and user intent. This makes interactions feel more natural, fostering deeper engagement in conversations. Additionally, GPT-4.5 has improved adaptability, enabling it to respond with greater sensitivity in emotionally charged discussions. OpenAI also highlighted advancements in multilingual comprehension, making the model more accessible to users worldwide. It is currently available as a research preview for Pro users; Plus and Team users are due to gain access next week, while free users will have to wait.

As always, that was not the only release from the American tech company. It also rolled out its new “Deep Research” feature, which works as an autonomous research assistant. Research that would take a human hours can now be done in minutes. It scours the internet and analyses sources, then compiles a comprehensive answer from the relevant ones, and it can be used in any field.

Elsewhere, Google released “co-scientist”, a research assistant geared towards academic and scientific work. It helps researchers generate original hypotheses and research proposals, which should facilitate and accelerate future scientific discovery. Remarkably, the assistant reportedly reached in 48 hours the same conclusion that had taken scientists almost a decade.

The race to create the most powerful model is heating up rapidly, and the arrival of Chinese competitors has caused a stir in Silicon Valley. This month xAI responded by releasing Grok 3, dubbed “scary smart” by Elon Musk. It is the company's most powerful model so far and performed very well on industry-standard benchmarks.

Sam Altman predicted that by the end of the year, AI will outperform all human software developers and become the ultimate programmer, allowing human developers to concentrate on more complex software design. Enter Anthropic’s Claude 3.7 Sonnet, which the company describes as the first hybrid reasoning model on the market. It was released alongside Claude Code, a new agentic coding tool.

Progress in China is also thriving, with ByteDance releasing its new video model, Goku. It is incredibly realistic, and the results are arguably more impressive than those of some video generation models in the West. All of the videos below were produced using the model. Also from China, Moonshot AI released its large language model Kimi k1.5, which likewise performed very well on industry-standard benchmarks.

Ever had brilliant thoughts while driving but couldn’t jot them down? Soon, that might change. Meta has introduced “Brain2Qwerty”, a groundbreaking study featuring a deep learning model capable of decoding sentences directly from brain activity. It sounds like an Arthur C. Clarke idea, but Meta scientists are seemingly close to a tool that, basically, reads your thoughts. It does still have quite a high error rate, with 32% of decoded characters being wrong.

Figure has just shown off its general-purpose humanoid robot running Helix, a new Vision-Language-Action model. Using Helix, the robot can handle almost any type of household item and can distinguish between items even when it has never encountered them before. The robots respond to voice commands and can work with other Figure robots to solve problems together. It might not be too long before people start having robots at home, but the technology is also likely to see more factory work automated by humanoid robots.

As always, it was a busy month in AI. What the rest of 2025 has to offer remains to be seen, but the speed at which new models and systems are being released is nothing short of incredible. The race to Artificial General Intelligence is well and truly underway, and progress is not slowing down.

That is why you should stay up to date with all things Artificial Intelligence, and you can read the other monthly reviews here.