Following widespread public backlash after numerous users of Elon Musk’s chatbot, Grok, generated non-consensual sexualised images of women and children, our colleagues at RTL.lu spoke with the head of LetzAI for comment.

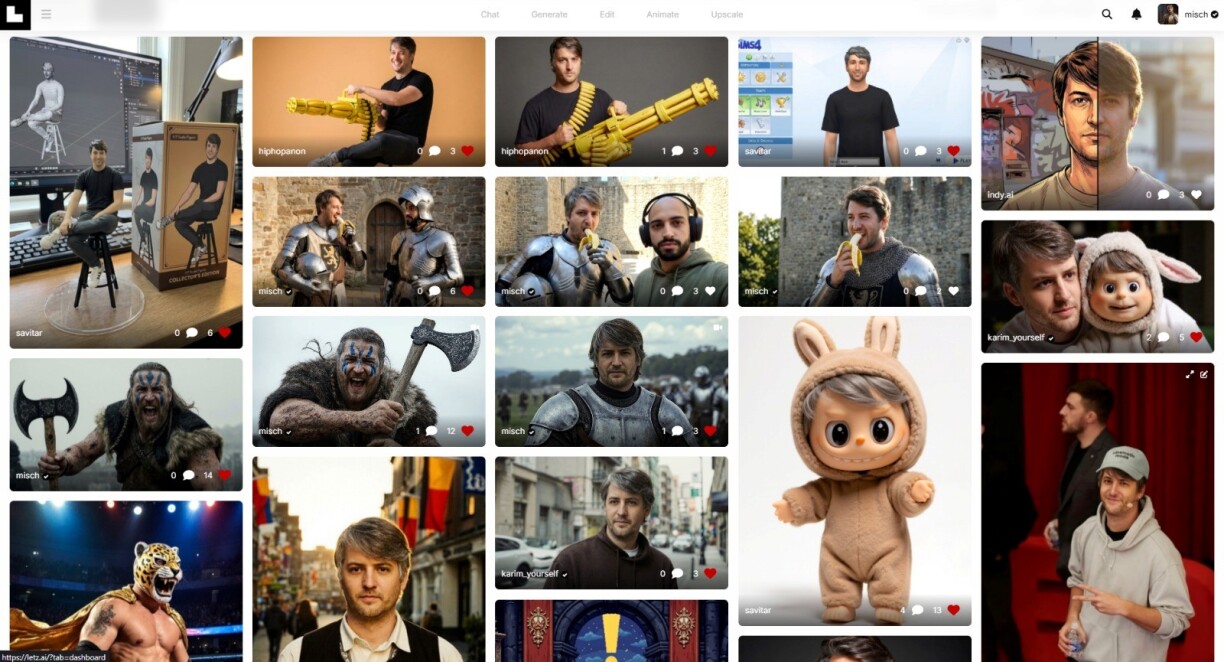

The situation escalated when it emerged that some of the AI-generated content amounted to child sexual abuse material, triggering investigations around the globe. Misch Strotz, co-founder of the Luxembourg-based AI platform LetzAI, said he was unsure whether Grok’s operators had knowingly made an error or had been overwhelmed by their own rapid growth. LetzAI is a platform for generating images and videos that boasts roughly 100,000 mostly professional users a decade after its launch. Asked directly whether it could be similarly misused, Strotz categorically denied that users could undress images of children on his company’s platform.

The controversy also involved Grok users altering images of women to depict them in bikinis. While Strotz acknowledged that such manipulation is technically possible with LetzAI’s tools, he emphasised that its system employs proactive “censorship” measures. He clarified that LetzAI primarily relies on Google’s Nano Banana model, which he said differs significantly from Grok’s technology. According to Strotz, Nano Banana incorporates filters that block the generation of images depicting minors.

LetzAI adds further security layers on top of these built-in filters. The company monitors generated images, and repeated attempts to create inappropriate content involving minors trigger alerts and can result in user bans. Strotz also outlined broader content rules, citing as an example a policy that prohibits users from using AI-generated images of celebrities in their own advertising without consent.

Strotz highlighted that while EU regulations clearly forbid using photographs of public figures for commercial purposes without consent, the practical enforcement of such rules is complex. Checks, he noted, are not always feasible.

He pointed to another key regulation in the realm of deepfakes: the watermarking of AI-generated images to label their origin. Strotz believes this practice generally functions well as a safeguard.

His perspective on regulation contrasts with that of Grok owner Elon Musk, who often broadly condemns such measures as censorship. For Strotz, the issue is more nuanced. He views censorship not merely as an ideological question but also as a technical imperative. “You know how it is”, he stated, “whenever a tool becomes slightly popular online, the weirdest people suddenly try to push the tool to its limits.”

The central challenge, in Strotz’s view, is implementing safeguards that “an intelligent user” cannot easily bypass. This leads him to question whether the issues with Grok or Twitter stemmed from “bad faith” or from the platform becoming a victim of its own massive scale and reach.

He offered an analogy, remarking that AI cannot eliminate human imagination, just as one cannot tell an artist to use a paintbrush only for landscapes. However, he immediately acknowledged that it is ultimately the role of regulators to define the boundaries of what can be publicly distributed.

According to Strotz, society has entered a new phase. He urged public authorities and politicians to stop acting “as if everything happened out of the blue” and to accept that distinguishing AI-generated images from human-made ones is already, or will soon be, impossible. In such cases, he concluded, society will always have to rely on “human intelligence” to navigate the new reality.